Clinical Scenario

John is early in his first year as a practicing audiologist and is currently working in a private-practice setting. During the previous 3 months, he has attended educational trainings provided by various hearing aid manufacturers. During some of these trainings, the presenter was persuasive in recommending frequency-lowering technology (i.e., technology that lowers information from the high- frequency region to a lower-frequency region) for amplifying high-frequency sensorineural hearing loss. In other sessions, however, extended-bandwidth amplification (i.e., amplification with a wide spectral bandwidth out to approximately 8–10 kHz) was recommended as the standard of care for providing audibility of high-frequency information. John is conducting a hearing aid evaluation on Mr. Smith, a 50-year-old patient who has a bilateral, mild sloping to moderately severe sensorineural hearing loss (SNHL). Mr. Smith’s otologic history is unremarkable and he has never used amplification. Mr. Smith reported recently experiencing a mild cerebrovascular accident (CVA) and is scheduled to begin speech-language therapy next week to address mild speech-production difficulties. Thus, one of John’s goals is to maximize Mr. Smith’s speech-recognition abilities to ease communication and facilitate maximum audibility of the speech signal during the therapy sessions. John does not yet know which amplification strategy to use to maximize speech recognition for Mr. Smith and has decided to conduct a review of the literature to help guide his decision.

Background Information

Currently, there is a lack of agreement regarding the best way to provide audibility of high-frequency speech information through amplification devices, particularly for individuals like Mr. Smith who have moderate to moderately severe high-frequency thresholds. Some propose extending the spectral bandwidth of amplification (i.e., including additional high-frequency information) to improve speech-recognition performance. Others, conversely, recommend moving speech information from the high-frequency region to lower-frequency regions through various signal-processing techniques (for specific details see Alexander, 2013). Interest in these signal- processing techniques resulted from the potential limitations of traditional amplification for high-frequency hearing loss (Alexander, 2013). For example, prior to widespread use of digital feedback-suppression algorithms in amplification devices, providing gain in the high-frequency region was a significant clinical challenge.

The literature is clear on the fact that providing access to and audibility of the high-frequency region of the speech signal is beneficial for listeners of all ages (Füllgrabe, Baer, Stone, & Moore, 2010; Monson, Hunter, Lotto, & Story, 2014; Pittman, 2008; Stelmachowicz, Lewis, Choi, & Hoover, 2007). For example, Pittman (2008) found improvements in novel word learning among children with normal hearing and children with hearing impairment when making more high-frequency information audible (i.e., extending the speech-signal bandwidth). For adults, Moore, Füllgrabe, and Stone (2010) found that including additional high-frequency speech energy (above 5 kHz) increased listener judgments of sound quality, with signals containing energy up to 10 kHz described as having improved “clarity” and “pleasantness.” The authors also found a small, but significant, improvement in speech recognition when extending the spectral bandwidth (i.e., including additional high-frequency energy) to 7.5 kHz when the speech signal and noise were presented from different locations.

Clinical audiologists should strive to provide appropriate amplification that maximizes speech understanding and sound quality in all listening environments. Similarly, speech-language pathologists should understand the effectiveness and outcomes associated with amplification systems for patients with hearing loss.

Clinical Question

John undertook the current review to explore the literature on the two approaches to improving speech recognition for patients with hearing loss. John used the PICO (population, intervention, comparison, outcome) format, as recommended by the American Speech-Language-Hearing Association (AHSA), to formulate his clinical question: For adults with mild sloping to moderately severe SNHL (P), is frequency-lowering processing (I) or extended-bandwidth processing (C) preferable in improving speech recognition performance (O) in quiet and noisy listening environments?

Search for the Evidence

Because John had less than a week to provide a solution to Mr. Smith, he used the Quick Review Response (QRR) method described by Larsen and Nye (2010). According to the authors, this technique expedites information retrieval, data extraction, and analysis to provide preliminary answers to questions using methods commonly used in a full systematic review. Limitations of this method include: 1) sources of information retrieval may be limited, and 2) data analysis focuses solely on primary outcomes and ignores moderating variables that would allow for a deeper understanding of the outcomes (Larsen & Nye, 2010). Given the time constraints, John deemed this technique appropriate for the clinical decision at hand.

Inclusion Criteria

The primary criterion for inclusion in the analysis was peer review. John was aware that this criterion would exclude white papers written by hearing aid manufacturers, research articles from trade journals, and unpublished doctoral dissertations. Since the patient was an adult male, articles referencing work specifically with children in the title or abstract were excluded. The dependent variable was limited to some form of speech identification (e.g., phonemes, words, sentences) and not to the detection of specific phonemes/morphemes (e.g., detection of the presence or absence of signals from the fricative class of phonemes as in the Plurals Test). John limited the dependent variable to examine the effects of various audible bandwidths and signal-processing techniques on the linguistic processing of the speech signal rather than simply phonemic detectability. For the purposes of this review, the term extended-bandwidth processing refers to instances where additional high-frequency energy (i.e., above approximately 4 to 5 kHz) is included in the speech signal through traditional processing (often carried out via low- pass filtering). Frequency lowering refers to conditions where the high-frequency portion of the speech signal is lowered, in some way, to a lower-frequency region. John understands that the speech signals used in the cited studies frequently underwent several forms of processing (e.g., low-pass filtering, frequency compression, frequency transposition, frequency translation) to accomplish the objective of the study. Keeping this in mind, the primary goal of his review was to examine, from a broad perspective, the effectiveness of these two signal-processing techniques for providing audibility of high-frequency speech energy.

Additionally, study participants were required to have sensorineural hearing loss and mean thresholds in the mild to moderately severe range in a sloping configuration. Specifically, hearing thresholds must fall within the normal/ mild hearing loss range in the low-frequency region (i.e., below 1000 Hz) and slope to a moderate or moderately severe loss in the high-frequency region. Studies using participants with various degrees of hearing impairment were included only when John could separately analyze the results that were obtained from listeners with hearing thresholds comparable to his patient. This meant including studies where listeners had lesser or greater degrees of hearing loss than his patient, but John believed that only including studies where participants had precisely the same degree of hearing loss would not provide sufficient information from which to make an informed clinical decision.

Search Strategy

John searched for relevant studies using EBSCO, PubMed, Google Scholar, as well as journals from the American Speech-Language-Hearing Association (ASHA) and American Academy of Audiology (AAA). The following keywords were used in isolation and in combination:

- Mild-to-moderately severe

- High-frequency

- Hearing loss

- Amplification

- Frequency lowering

- Extended bandwidth

Information Retrieval

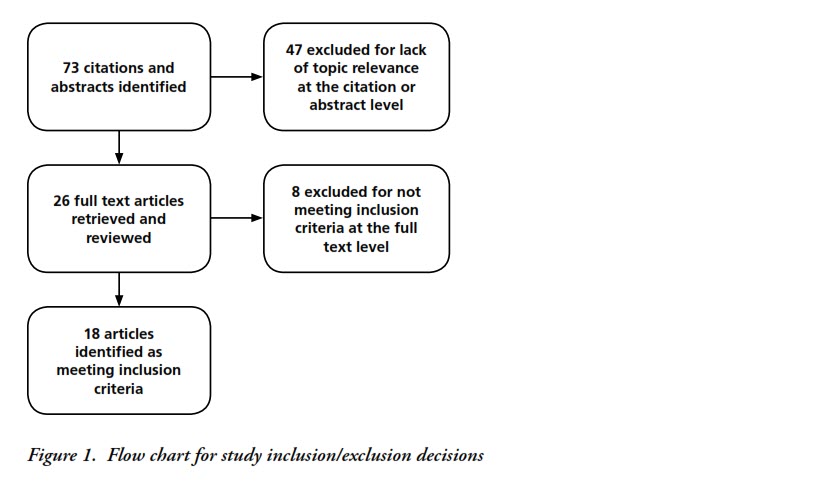

Seventy-three articles were retrieved based on a review of the abstract and manuscript title. Of these, twenty-six were retained for additional review. Additional analyses yielded 18 articles that met the inclusion criteria of this review. Figure 1 provides a summary of the studies identified and those included or excluded at each step during the retrieval process. Specific details of each study are provided in Tables 1 and 2. The former contains studies conducted within the previous 10 years (i.e., 2006 through 2016), while the latter contains information from studies older than 10 years. John’s discovered that many studies required listeners to identify speech in both quiet and noisy listening conditions. Therefore, to gain a thorough understanding of the data, his analysis focused on each listening condition individually.

Evaluating the Evidence

Summary of Results in Noise

A majority of the studies required listeners to identify the speech signal (e.g., phonemes, words, sentences) in listening conditions with competing background noise. Considering the cumulative results from all studies, extending the audible bandwidth to include more high-frequency information appears to enhance speech recognition for hearing-impaired listeners with hearing loss similar to John’s patient, particularly in noisy listening conditions (e.g., Cox, Johnson, & Alexander, 2012; Pepler, Lewis, & Munro, 2106).

Results using frequency-lowering techniques by hearing-impaired listeners with comparable hearing sensitivity seem less promising. For example, Alexander, Kopun, and Stelmachowicz (2014) studied consonant identification in speech-shaped noise recorded through two clinically fit hearing aids (Widex® InteoTM IN-9 BTE and Phonak NaídaTM V). Results showed that while the nonlinear frequency compression (NFC) lowering technique improved recognition of phonemes from the fricative/ affricate class, the frequency-transposition technique used in the study degraded consonant identification in noise. Additionally, Miller, Bates, and Brennan (2016) found that listeners with gradually sloping hearing losses did not show significant improvements in speech recognition from signals processed through three commercially available hearing aids equipped with frequency-lowering technology. In fact, significantly poorer sentence-recognition thresholds were found with activated frequency-lowering techniques (i.e., linear frequency transposition and frequency translation) as compared to inactive. With NFC active, sentence- recognition performance did not improve or decrease. The authors suggested that clinicians should take caution when activating frequency lowering for this population (i.e., listeners with gradually sloping hearing loss), as it may degrade speech recognition in noise for some listeners.

John also examined studies that provided high-frequency information through processing strategies that included additional high-frequency signal amplification. A recent study by Pepler, Lewis, and Munro (2016) examined consonant identification among 36 listeners with hearing impairment (18 with cochlear dead regions and 18 without dead regions). Listeners without cochlear dead regions showed significant improvements in consonant identification when including additional high-frequency speech energy. The authors reported that there is no evidence to support limiting high-frequency amplification for adults with at least a moderate hearing loss, particularly in noisy environments. Additionally, Levy, Freed, Nilsson, Moore, and Puria (2015) obtained sentence reception thresholds among normal-hearing and hearing-impaired listeners with a mild to moderately severe sensorineural hearing loss. Sentence reception thresholds significantly improved when extending the signal bandwidth from 4 kHz to 10 kHz when the speech and noise were separated in location. The authors suggested that increasing audibility of high-frequency spectral energy may improve speech-recognition performance in the presence of spatially separated noise.

Summary of Results in Quiet

Upon review of the retained articles, John found less evidence to support including additional high-frequency speech information in quiet listening conditions. For example, Amos and Humes (2007) found a small but significant improvement in final-consonant recognition for elderly hearing-impaired listeners when increasing audibility of this region. Horwitz, Ahlstrom, and Dubno (2008) found similar results when increasing the low-pass filter cutoff to include additional high-frequency spectral detail. Conversely, however, Turner and Cummings (1999) and Hogan and Turner (1998) found the use of high-frequency spectral information to have an adverse effect on speech recognition in quiet when audiometric thresholds exceeded 55 dB HL. The authors advised caution when providing a gain above 4 kHz when thresholds fall within this hearing loss range and noted the need for verification measures to determine the utility of amplifying the high-frequency region.

Souza, Arehart, Kates, Croghan, and Gehani (2013) examined how frequency lowering using a form of frequency compression influenced speech intelligibility for adults with mild to moderate hearing loss. In quiet, no benefit in speech recognition was observed with the frequency-lowered speech when compared to unaltered speech. The authors suggested that listeners with the poorest high-frequency thresholds benefited the most from the frequency-lowering technique used in their study, but individuals with hearing loss similar to John’s patient showed no benefit. All studies considered, John determined that there was limited evidence to support increasing access to high-frequency speech energy in quiet conditions for listeners with hearing loss similar to his patient.

Summary of Evidence

John also examined the quality of the evidence identified during his search. Using criteria described in Cox (2005), all of the studies were a minimum of level III (i.e., well-designed, nonrandomized quasi-experimental studies), which is considered to be a moderate level of evidence. Following a detailed review of all evidence obtained in his search, John determined that there is a need for randomized controlled experimental studies that directly compare speech recognition outcomes in quiet and in noise using each signal-processing technique. Many of the studies used simulated hearing aid processing or speech processed through computer programs and did not subject the speech signal to processing through clinically fit hearing aids. Although additional variables may complicate study design, studies using clinically available hearing aids that offer extended-bandwidth and frequency-lowering technologies can help inform clinical decision making regarding amplification of the high-frequency portion of the speech spectrum.

The Evidence-Based Decision

For Mr. Smith, it appears that John should begin with amplifying high-frequency speech energy through extended-bandwidth processing, keeping in mind the goal of improving speech-recognition performance in noisy listening environments. It is long established that many hearing aid users report poorer performance and dissatisfaction with their devices in degraded environments. In a recent MarkeTrak study, Abrams and Kihm (2015) reported that between 30 and 50% of respondents described substantial difficulty when attempting to recognize speech in noise. Given the data obtained in this Brief, the strongest evidence supports amplifying high-frequency speech energy through signal processing that includes as much unaltered high-frequency information as possible compared to techniques that artificially lower high-frequency spectral information. That said, audiologists should always verify hearing aid function through real-ear and aided speech- recognition measures and validate patient satisfaction in all listening environments. If John concludes that the current technique is unsuccessful at improving Mr. Smith’s speech-recognition abilities, he should explore and offer additional signal-processing techniques to his patient. Souza et al. (2013) suggested that the use of high-frequency amplification techniques should be viewed in terms of the “audibility-by-distortion trade off ” (p. 1354), which may vary substantially among listeners. As such, audiologists should consider each case individually and adjust the prescribed amplification technique accordingly.

When interpreting these results, John must acknowledge the lack of randomized controlled studies that directly examine speech-recognition performance between signal-processing techniques for amplifying the high- frequency portion of the speech spectrum. Clinicians would benefit from additional studies using currently available hearing aids that offer both signal-processing techniques (i.e., extended-bandwidth processing and frequency lowering). Such studies would inform clinical decision making for hearing healthcare providers when fitting amplification devices for listeners who require amplification of the high-frequency auditory region.