From the Desk of Ann Kummer

According to the National Institute on Deafness and Other Communication Disorders (NIDCD), approximately 15% of American adults (about 37.5 million) report some difficulty hearing, and about 28.8 million of those adults could benefit from wearing hearing aids. Presbycusis (an age-related form of sensorineural hearing loss) is particularly common in individuals over the age of 60. Unfortunately for me, I am one of those individuals! As I was recently being fitted for hearing aids by my audiologist colleague, it became clear to me how little I know about hearing aids, despite my audiology training several decades ago (LOL!). Actually, I suspect that most speech-language pathologists, particularly those who graduated a few years ago, could benefit from a tutorial on hearing aids as well. Therefore, I am particularly excited about this 20Q from Dr. Gustav (“Gus”) Mueller!

Dr. H. Gustav Mueller holds faculty positions with Vanderbilt University, the University of Northern Colorado, and Rush University. Dr. Mueller is a Founder of the American Academy of Audiology and a Fellow of the American Speech-Language-Hearing Association. He has co-authored over ten books on hearing aids and hearing aid fitting, including the recent Hearing Aids for Speech-Language Pathologists. Gus is the co-founder of the popular website www.earTunes.com. Gus is my audiology counterpart, as he is the Contributing Editor for AudiologyOnline, where he writes the monthly column “20Q With Gus.” (He taught me all the tricks of this trade.) Finally, he has a private consulting practice on the tropic (his description) North Dakota island, just outside the city of Bismarck. Check it out at http://www.gusmueller.net/fox-island-audiology-north-dakota-nd.htm

In this 20Q article, Dr. Mueller answers common questions about modern hearing aids and describes new and innovative technology. In addition, he discusses strategies that are used to obtain an optimum fitting.

Now…read on, learn, and enjoy!

Ann W. Kummer, PhD, CCC-SLP, FASHA, 2017 ASHA Honors

Contributing Editor

Browse the complete collection of 20Q with Ann Kummer CEU articles at www.speechpathology.com/20Q

20Q: Modern Hearing Aids - A Primer for Speech-Language Pathologists

Learning Outcomes

After this course, readers will be able to:

- Describe clinical tests that are used to evaluate the hearing aid candidate.

- Describe the patient benefit expected from advanced hearing aid technology.

- Describe the evaluation and verification process for the fitting of modern hearing aids.

Gus Mueller

1. I recently saw that you wrote a book on hearing aids that is geared toward speech-language pathologists?

Right, along with my colleague Lindsey Jorgensen. To be honest, we weren’t too sure what you all need to know about hearing aids, or what you want to know about hearing aids, so we included a little bit of everything. To make it a more general audiology reference, we even added chapters on diagnostic testing, auditory pathologies, implantable devices, and rehabilitative audiology.

I know your interest at the moment lies with hearing aids, so we’ll stick with that. It’s always fun to talk about the latest technology, but I do need to emphasize that there is a systematic fitting process entailing several steps; it’s a lot more than the instruments themselves. Both ASHA and the American Academy of Audiology (AAA) have detailed step-by-step guidelines regarding the selection and fitting of hearing aids. In general, they go something like this:

- Candidacy and needs assessment

- Selection of product and features

- Individualization and verification of the fitting

- Orientation and counseling

- Real-world outcome measures

So, a reasonable starting point for our discussion would be determination of candidacy, which for the most part is based on the pure-tone audiogram. Okay with you?

2. Sounds good. I assume the patient has to have a hearing loss?

That is usually true, yes. It would be uncommon to fit hearing aids to someone whose thresholds were no worse than 20 dB HL. What has changed somewhat over the past decade or two is the fitting of hearing aids to someone who has normal hearing through 2000 Hz, and only a mild loss in the 3000-6000 Hz range—I’m referring to a loss of only 25-40 dB. This is partly because of improvements in technology (feedback reduction circuits in particular), but also I think because audiologists have a greater appreciation of how these higher frequencies contribute to speech understanding.

3. You’re referring to the speech banana?

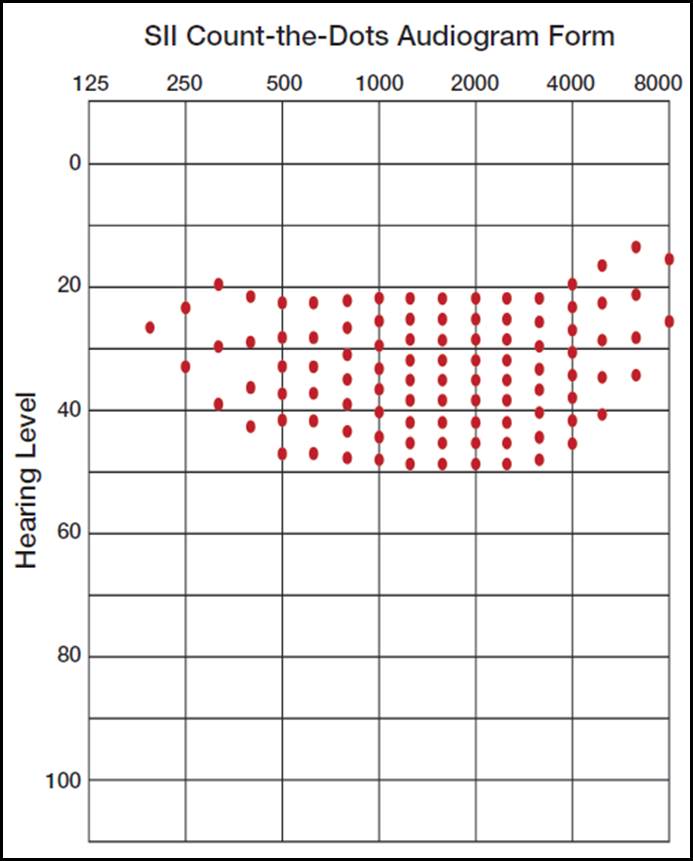

Yes, the long-term average speech spectrum (LTASS), which is fondly referred to as the “speech banana” because it sort of resembles a banana when it is converted from SPL to HL. You may have noticed that it is fairly common to place the speech banana on the audiogram and use it for counseling. There have been several studies of average speech over the years, and LTASS findings do vary slightly from study to study, but they are in pretty good general agreement. In the mid-frequencies, the top of the banana should be around 20 dB HL and the bottom should be around 50 dB HL. But be on the lookout for “bad bananas.”

4. What’s a bad banana? I would assume that an LTASS is an LTASS is an LTASS?

You would think so, but unfortunately, many clinics have found a way to mess it up. While you were asking that question, I did a Google Image search on “speech banana” and found some bananas that, rather than going from the correct 20 to 50 dB HL, went from 35 to 65 dB HL. If this is used for counseling or treatment decisions (and it probably will be), it can have huge consequences. Imagine that you took your 3-year-old son in for testing, and the audiometric results revealed that he had a flat 35 dB loss. If he were tested at Clinic A, where they use the correct 20 to 50 dB HL banana, you would be told that your son was not hearing about two-thirds of the important sounds of average speech. On the other hand, if you had gone to Clinic B, where the audiologists use the 35 to 65 dB HL speech banana, you would be told that all is well: it would appear that he is hearing 100% of average speech! And for adults, you can see how this would affect the determination of the need for amplification.

I’ve included my favorite LTASS audiogram in Figure 1. It is not copyrighted, so click, copy, paste, and use it as much as you want for patient counseling.

Figure 1. The SII 2010 Count-The-Dots audiogram from Killion and Mueller, modified from the 1990 Mueller and Killion AI form. By plotting an audiogram on this form, an estimate of the patient’s Speech Intelligibility Index (SII) can be obtained by counting the audible dots. Note that the vertical density of the dots varies with the importance function of each frequency.

5. What’s the purpose of the dots?

Oh yes, the dots. They are rather important. I’m sure you’re aware that the different frequencies of speech have different importance functions. This relates to the original articulation index (AI) from the 1940s, which has been modified and is now termed the Speech Intelligibility Index, or SII. When Mead Killion and I designed the chart in Figure 1, we placed 100 dots to roughly correspond to the importance function of speech: frequencies with more importance have a greater density of dots. Once you plot an audiogram, all you have to do is count the audible dots (those that fall below the plotted thresholds, that is, louder than the patient’s thresholds), and you have the patient’s SII: the percent of average speech that is audible for that individual. This can be used for counseling (“You are only hearing 50% of speech!”), to help determine hearing aid candidacy, and for comparison with the aided SII at the time of the hearing aid fitting to determine benefit from amplification. Importantly, the SII is not a speech recognition score, but you can use SII values to predict speech understanding.
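To make the counting rule concrete, here is a minimal Python sketch. The ten dot coordinates and the audiogram are hypothetical placeholders, not the 100 dots of the actual chart in Figure 1; the function simply reports the share of dots that are at least as loud as the threshold at their frequency. With the real 100-dot chart, the raw count is the SII percentage directly.

```python
from typing import Dict, List, Tuple

# Each dot is (frequency in Hz, level in dB HL). These ten dots are
# hypothetical placeholders; the real chart uses 100 dots whose vertical
# density follows the SII importance function.
Dot = Tuple[int, float]

def count_the_dots_sii(dots: List[Dot], thresholds_db_hl: Dict[int, float]) -> float:
    """Return the percentage of dots that are audible for this audiogram.

    A dot is audible when its level is at least as loud as the threshold
    at its frequency, i.e., it plots on or below the threshold line on a
    standard audiogram, where intensity increases downward.
    """
    audible = sum(1 for freq, level in dots if level >= thresholds_db_hl[freq])
    return 100.0 * audible / len(dots)

dots = [(500, 25), (500, 40), (1000, 25), (1000, 35), (1000, 45),
        (2000, 25), (2000, 35), (2000, 45), (4000, 30), (4000, 45)]
sloping_loss = {500: 20, 1000: 25, 2000: 40, 4000: 50}  # hypothetical audiogram
normal_hearing = {500: 0, 1000: 0, 2000: 0, 4000: 0}

print(count_the_dots_sii(dots, sloping_loss))    # 60.0
print(count_the_dots_sii(dots, normal_hearing))  # 100.0
```

The same counting applies to an aided audibility curve, which is how an unaided and aided SII can be compared.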

6. On most audiograms, I see that the audiologist already has conducted speech testing. Why can’t you just use those values to help determine hearing aid candidacy?

True, most routine audiologic exams include testing using monosyllabic words in quiet. The material typically is the Northwestern University Test #6 (NU-6), presented from standardized recordings. The 50 words that are presented are scored in percent correct, and the result is referred to as a speech recognition score (incorrectly called speech discrimination prior to 1980). There is a good reason, however, why this score has minimal use in the fitting of hearing aids. The purpose of this testing is to determine the patient’s optimum ability to correctly recognize the words of the test, and for this reason, we want to ensure that the words are as audible as possible. This testing normally is conducted at 75 or 80 dB HL, which would be 90 dB SPL or higher. This is very loud speech, just below the patient’s loudness discomfort level (LDL). Average-level conversational speech is around 60 dB SPL (45-50 dB HL), a much softer level. For this reason, it is common for someone who is an excellent candidate for hearing aids to have an NU-6 speech recognition score of 90% or higher, simply because when we make speech audible, they score well. There are cases, of course, in which the scores are still very low even when we do make the speech audible, and this is one of the indicators that a cochlear implant should be considered.

7. Many of the older patients I talk to who have hearing loss say that they only have trouble in background noise. Is speech-in-noise testing also part of the pre-fitting test battery?

You make a good point. Speech-in-noise testing indeed is a component of best practice guidelines for hearing aid fitting and is conducted by audiologists who follow these guidelines. It can be very useful for hearing aid selection, determining the need for assistive listening devices, and post-fitting counseling. There is a very poor correlation between a patient’s score for words in quiet and their speech understanding in background noise. A patient who scores 100% in quiet may fail miserably when background noise is introduced. This is something we need to know before fitting hearing aids.

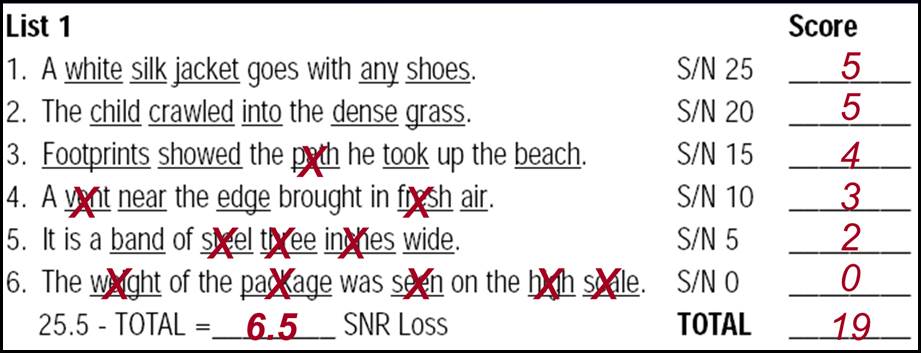

There are several standardized speech-in-noise tests available. There is no reason that you need to be an expert in their use, but let me talk a little about the most common of these tests, as you might see it mentioned in reports. The first one is the Quick Speech-in-Noise test, referred to as the QuickSIN. Each list has six sentences, and each sentence has five key words that are scored. Each sentence is recorded at a different signal-to-noise ratio (SNR), beginning at +25 dB SNR (essentially listening in quiet) and becoming more difficult in 5 dB steps until the final sentence, which is recorded at 0 dB SNR (difficult even for people with normal hearing). The test is then scored as SNR-Loss: the number of dB by which the patient’s performance is poorer than the norms for people with normal hearing. For example, if a given patient had a QuickSIN SNR-Loss of 10 dB, that would mean that when he goes out to a restaurant, for him to perform as someone with normal hearing would, either the noise would have to be turned down by 10 dB or his companion would have to talk 10 dB louder. This is a perfect example of why unaided speech-in-noise performance is important for patient counseling. An example of this test is shown in Figure 2 for a patient who had a 6.5 dB SNR-Loss.

Figure 2. Sample scoring for the QuickSIN for one list of six sentences (30 words). Normally two lists would be used for each ear and the SNR-Loss would be averaged. The “X” indicates the words that the patient missed, and the total correct for each sentence is marked in the column to the right. The number of correct words is totaled (19 in this example) and subtracted from the normative score (25.5), which gives this patient a 6.5 dB SNR-Loss. That is, to perform similarly to someone with normal hearing, the patient would need the background noise to be 6.5 dB softer.
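The arithmetic in the Figure 2 caption can be sketched in a few lines of Python. The 25.5 constant comes from the caption; the sentence-by-sentence scores below are hypothetical, chosen only so that the first list totals 19 words as in the example, and the function names are illustrative rather than part of any published scoring tool.

```python
from statistics import mean
from typing import List

# SNRs (dB) of the six sentences in a list: +25 down to 0 in 5 dB steps.
SENTENCE_SNRS_DB = list(range(25, -1, -5))

# Normative value used in the Figure 2 example.
QUICKSIN_NORM = 25.5

def quicksin_snr_loss(words_correct: List[int]) -> float:
    """SNR-Loss (dB) for one list, given key words correct per sentence."""
    if len(words_correct) != len(SENTENCE_SNRS_DB):
        raise ValueError("a QuickSIN list has six sentences")
    if any(not 0 <= n <= 5 for n in words_correct):
        raise ValueError("each sentence has five scored key words")
    return QUICKSIN_NORM - sum(words_correct)

def ear_snr_loss(lists: List[List[int]]) -> float:
    """Average SNR-Loss across the lists given to one ear (normally two)."""
    return mean(quicksin_snr_loss(words) for words in lists)

# Hypothetical per-sentence scores; the first list totals 19 as in Figure 2.
list_1 = [5, 5, 4, 3, 2, 0]
list_2 = [5, 5, 5, 3, 2, 1]
print(quicksin_snr_loss(list_1))        # 6.5
print(ear_snr_loss([list_1, list_2]))   # 5.5
```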

8. Anything else regarding pre-fitting testing?

I should mention that we have several pre-fitting self-assessment scales that are used to help understand the patient’s problems and guide them through the selection and fitting process. There are scales related to disability, handicap, expectations, motivation, listening needs, personality, and other areas of concern. If you’re interested, we reviewed a dozen or more of these self-assessment scales in a book chapter a few years back (Mueller, Ricketts & Bentler, 2014).

One more thing about the importance of speech-in-noise testing. In recent years you probably have seen articles related to something called “hidden hearing loss,” which many have attributed to cochlear synaptopathy (Kujawa, 2017). These are typically people who complain of problems understanding speech, especially in background noise, but have normal pure-tone thresholds. These, of course, could also be cases of central auditory dysfunction, unrelated to cochlear synaptopathy. Regardless of the exact etiology, my point here is that maybe these hearing losses are only “hidden” because the patients are not receiving a complete test battery which includes tests like the QuickSIN. Earlier, we discussed that we normally would not fit hearing aids to someone with normal hearing, but there has been some success using hearing aids with this unique group of patients.

9. So after all that pre-fitting testing, it must be time to select the product? That’s what I’ve been waiting to hear about.

Okay, let’s jump into it. First, in case you haven’t noticed, it has now become routine to fit almost everyone bilaterally if they have an aidable hearing loss in both ears. Bilateral rather than unilateral fitting, of course, has always made sense from a hearing science standpoint, and it makes even more sense today because many hearing aid features involve the two hearing aids talking to each other. With only one hearing aid, those features aren’t available. And by the way, the preferred terms for hearing aid fittings are bilateral and unilateral, not binaural and monaural. That’s because we do know that there is a hearing aid on each ear (bilateral), but we don’t know what is going on in the lower brainstem (binaural).

Regarding all the different features that are available today, audiologists who dispense hearing aids tend to group their products into tiers. That is, an entry-level Tier I product might just have the more basic features, a Tier II product has some added features and then the Tier III premier product is loaded with all the features available. Working with the patient, it is then determined what features are needed, which will drive the fitting to a given Tier, and also determine the price. If the audiologist uses a bundled pricing structure (cost of instrument, the fitting and all post-fitting services pre-paid), the difference between a pair of entry-level products and a pair of premier hearing aids can be several thousand dollars.

If you haven’t paid much attention to hearing aids lately, one thing that has significantly changed is the most popular style. Back in the mid-to-late 90s, custom in-the-ear (ITE) hearing aids, mostly completely-in-canal (CIC) models, made up 75-80% of hearing aids sold in the U.S. Behind-the-ear (BTE) hearing aids were considered old fashioned, and were typically only used when the hearing loss was more severe, or for the pediatric population. That has now turned upside down, with BTE products having an ~80% market share. But these are not your grandfather’s BTEs! What has driven the move to today’s BTEs?

- Advanced feedback cancellation algorithms made “open” fittings possible for a wide range of patients. Open means that the ear canal is only partially closed by an ear tip, allowing sound to travel directly to the eardrum and making the patient’s own voice sound more natural. This is difficult to accomplish with an ITE or CIC product.

- The receiver was removed from the hearing aid and attached by a thin wire, placed in the ear canal. These are referred to as receiver-in-canal (RIC) BTE products, or simply called RICs (pronounced “Ricks”). Removing the receiver from the case allowed for smaller and more interesting BTE case designs.

- The thin wire leading to the receiver is encased in a very thin tube, much smaller than the tubes of yesteryear and barely visible, even to an audiologist’s trained eye (and you can bet we are checking out famous people during TV appearances to see who is using hearing aids!). The thin wire/tube and the tiny hearing aid behind the ear make the mini-BTE RIC cosmetically acceptable for most patients.

- Introduced at about the same time as the RIC were instant-fit ear couplings, ranging from very open to tight fittings (called tips or domes; the tighter-fitting ones are termed closed domes or double domes). As the name indicates, these are ear tips that come in a variety of sizes and snap onto the receiver. No ear impression is needed, and indeed they are instant. They are less visible and more comfortable than standard earmolds, and they pair well with RIC hearing aids, which also can be stocked by the audiologist in advance. This means that a patient can walk in the door planning on simply having a diagnostic exam and walk out an hour or two later owning a pair of new hearing aids.

While this all sounds good, and is popular, there are drawbacks. The ear canal is a hostile environment for an electronic component such as a receiver. A plugged or dead receiver has become the number one repair item for audiologists, and for larger practices, it is not uncommon to have 2-3 patients a day walk in with this problem (especially on Fridays around closing time). A second problem is that patients frequently do not insert the instant-fit tips deep enough, which reduces gain and sound quality. And finally, the instant-fit tips do not serve as a dependable “anchor” for the BTE; they are much poorer at this than the custom earmolds we were accustomed to in the past. Combine the retention issue with very small and light BTE products and, to no one’s surprise, we are seeing more lost hearing aids. For these reasons, many audiologists have abandoned the instant-fit tips and have gone back to small custom ear couplings, but the instant-fit tips remain popular: they are used with maybe 50% of the RIC products dispensed.

10. Let’s talk specifically about features. I’ve heard a lot about hearing aid “noise reduction”. What’s the straight scoop? Does it really work?

Yes, but probably not to the extent that you have heard. Here is the deal. The most common noise reduction algorithm, and the one that has the greatest effect, is based on modulation detection and analysis. When the signal classification system detects modulations that resemble speech (4-6 modulations/second), no change in processing occurs. When the modulations in the input no longer resemble speech, and it is determined that the dominant signal is noise, gain is reduced (by 5-10 dB; this varies from product to product and can be changed with programming). This analysis occurs independently in each channel, so in a 48-channel instrument, gain could be reduced in some of the channels (usually the lower frequencies) and not in others. The key point here is that it’s a gain reduction scheme, meaning that speech as well as noise in a given channel will be reduced equally. The signal-to-noise ratio is not improved, and therefore we would not expect speech understanding to improve. In fact, that is what numerous studies have found (see Ricketts, Bentler and Mueller, 2019 for review). It has been shown, however, that the noise reduction algorithm does provide more relaxed listening and reduces listening effort. There also seems to be some benefit when demanding cognitive tasks are present. Indirectly, therefore, there may be some real-world benefit for speech intelligibility that doesn’t appear in abbreviated laboratory measures. There doesn’t seem to be any serious downside to using noise reduction, so most audiologists activate it for all their patients; it is a standard feature on nearly all hearing aids.
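The key point, that channel-by-channel gain reduction lowers speech and noise together, can be shown with a toy Python model. Everything here is an assumption for illustration: the per-channel levels are made up, and the `looks_like_speech` rule (a dominant envelope modulation rate of 4-6 Hz) is a hypothetical stand-in that is much cruder than a real product's signal classification system. The 8 dB reduction sits inside the 5-10 dB range mentioned above.

```python
from dataclasses import dataclass, replace
from typing import List

@dataclass(frozen=True)
class Channel:
    center_hz: int
    speech_db: float    # speech level in this channel (dB SPL)
    noise_db: float     # noise level in this channel (dB SPL)
    mod_rate_hz: float  # dominant envelope modulation rate of the input

    @property
    def snr_db(self) -> float:
        return self.speech_db - self.noise_db

def looks_like_speech(ch: Channel) -> bool:
    # Hypothetical rule: speech envelopes fluctuate about 4-6 times per second.
    return 4.0 <= ch.mod_rate_hz <= 6.0

def apply_noise_reduction(channels: List[Channel], reduction_db: float = 8.0) -> List[Channel]:
    """Reduce gain, independently per channel, wherever the input looks like noise."""
    processed = []
    for ch in channels:
        if looks_like_speech(ch):
            processed.append(ch)  # speech-like: no change in processing
        else:
            # Gain reduction lowers everything in the channel, speech included.
            processed.append(replace(ch,
                                     speech_db=ch.speech_db - reduction_db,
                                     noise_db=ch.noise_db - reduction_db))
    return processed

before = [Channel(500, 55.0, 62.0, 12.0),  # low-frequency channel dominated by noise
          Channel(2000, 60.0, 50.0, 5.0)]  # speech-dominated channel
after = apply_noise_reduction(before)
for b, a in zip(before, after):
    print(b.center_hz, "Hz: noise", b.noise_db, "->", a.noise_db,
          "| SNR", b.snr_db, "->", a.snr_db)
```

The noise in the 500 Hz channel drops by 8 dB, but so does the speech, so the SNR in every channel is unchanged. That is why this feature alone is not expected to improve speech understanding.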

11. What about directional hearing aids? They are also reported to reduce background noise, right?

Correct. Directional technology is much better at reducing background noise than noise reduction algorithms are. More on that in a minute, but first I want to say that at one time, “directional microphone hearing aids” were a big deal. Not so anymore. Nearly all hearing aids dispensed today have the capability for directional processing; it just comes along for the ride. Ironically, they also no longer employ directional microphones. The directional effect is accomplished through digital processing of the inputs from two omnidirectional microphones (yes, nearly all hearing aids have two microphones, even most of the custom products).

As you probably know, the reason that directional technology can improve the SNR is that the output of the hearing aid can be altered as a function of a sound’s azimuth of origin. With traditional directional products, we assume that the user is facing the talker he wants to hear, and therefore, sounds from the rear hemisphere are attenuated. Yes, speech as well as noise from the back will be reduced, but we assume that this is not the speech that the listener wants to hear. This does have the effect of improving the SNR (~3 to 5 dB), and in turn, speech recognition. The benefit, however, is reduced as the distance from the desired talker increases, as room reverberation increases, and as the fitting becomes more “open.”
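One textbook way to get a directional pattern from two omnidirectional microphones is a first-order delay-and-subtract (differential) array: delay the rear microphone's signal and subtract it from the front one. The Python sketch below computes the idealized free-field response of such an array relative to straight ahead. It illustrates the general principle only and is not any manufacturer's algorithm; the 12 mm microphone spacing and 1000 Hz test frequency are assumptions. Setting the internal delay equal to the sound's travel time between the microphones produces a cardioid pattern, with a null directly behind the listener.

```python
import math

SPEED_OF_SOUND_M_S = 343.0

def relative_response_db(azimuth_deg: float, freq_hz: float = 1000.0,
                         spacing_m: float = 0.012) -> float:
    """Free-field response (dB re: 0 degrees) of a delay-and-subtract mic pair.

    0 degrees is straight ahead; 180 degrees is directly behind.
    """
    internal_delay_s = spacing_m / SPEED_OF_SOUND_M_S  # equal to travel time -> cardioid
    omega = 2.0 * math.pi * freq_hz

    def magnitude(theta_deg: float) -> float:
        # Extra time the wavefront takes to reach the rear mic after the front mic.
        travel_s = spacing_m * math.cos(math.radians(theta_deg)) / SPEED_OF_SOUND_M_S
        # |1 - exp(-j*omega*T)| = 2*|sin(omega*T/2)|, with T = travel + internal delay.
        return abs(2.0 * math.sin(omega * (travel_s + internal_delay_s) / 2.0))

    ratio = magnitude(azimuth_deg) / magnitude(0.0)
    return 20.0 * math.log10(max(ratio, 1e-6))  # floor at -120 dB for the null

for angle in (0, 90, 135, 180):
    print(angle, round(relative_response_db(angle), 1))
```

In this idealized case, sound from the side is about 6 dB down and sound from directly behind is cancelled. Real heads, rooms, and open fittings fall well short of this ideal, which is consistent with the more modest ~3 to 5 dB SNR improvement described above.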

Directional hearing aids have been around for 50 years, but a lot has happened fairly recently that has enhanced their benefit and made them more adaptable:

- The signal classification system, analyzing the overall SPL and spectrum of the input signal, predicts if the user will benefit from directional technology, and automatically turns it on and off accordingly.

- The signal classification system does a 360-degree search looking for dominant speech. If there is noise present, and a dominant speech signal is found from any direction (including sides and back), directionality will focus on that azimuth, with attenuation applied to sounds from other azimuths. The user no longer needs to look at the talker. This can be especially helpful for someone driving a car and talking to someone in the backseat.

- By using full audio data sharing between the right and left hearing aids (four microphones), it’s possible to develop a narrow focus for the directionality (±45 degrees around the look azimuth), which means considerably more attenuation for background sounds in the front hemisphere. This is useful in a noisy restaurant, where the patient is more or less surrounded by noise but is able to look directly at the person speaking.
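The automatic decisions in the list above can be sketched as a small rule-based function. This is a hypothetical illustration, not how any particular product's signal classification system works: the 60 dB SPL activation level, the 6 dB "dominance" margin, and the fallback to a front-facing focus when no single talker dominates are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

@dataclass
class Scene:
    overall_spl_db: float
    noise_present: bool
    # Estimated speech level (dB SPL) by azimuth in degrees; 0 = straight ahead.
    speech_by_azimuth: Dict[int, float] = field(default_factory=dict)

def choose_focus_azimuth(scene: Scene, min_spl_db: float = 60.0,
                         dominance_db: float = 6.0) -> Optional[int]:
    """Return None for omnidirectional, or the azimuth to focus on (hypothetical rules)."""
    # Quiet or noise-free scene: no predicted benefit, so stay omnidirectional.
    if not scene.noise_present or scene.overall_spl_db < min_spl_db:
        return None
    ranked = sorted(scene.speech_by_azimuth.items(), key=lambda kv: kv[1], reverse=True)
    if ranked:
        best_azimuth, best_level = ranked[0]
        runner_up = ranked[1][1] if len(ranked) > 1 else float("-inf")
        if best_level - runner_up >= dominance_db:
            return best_azimuth  # steer toward the dominant talker, even behind
    return 0  # assumed fallback: traditional front-facing directionality

# Driving: road noise, passenger talking from the back seat.
car = Scene(overall_spl_db=72.0, noise_present=True, speech_by_azimuth={180: 66.0, 0: 52.0})
print(choose_focus_azimuth(car))  # 180
```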

12. Sounds like hearing aids are a lot smarter than when I first learned about them. What other features are new?

The “smartness” is mostly determined by the accuracy of the signal classification system, not by the feature itself. Fortunately, these classification systems “get it right” most of the time and make the same decision that an experienced user would make. One thing, however, hasn’t changed over the years, and that’s the importance of appropriate high-fidelity audibility for inputs of all levels. That’s responsible for 80-90% of the success of the fitting; everything else gets stacked on top of that. With that said, here are some relatively new features that you might find interesting:

- Direct streaming of telephone calls and music from a smartphone (iPhone or Android).

- Tele-audiology: By using the Internet, a smartphone app, and compatible hearing aid fitting software, hearing aids can now be programmed remotely. A hearing aid user sitting in his living room can text his audiologist that he is having acoustic feedback issues. The audiologist, sitting in her living room, can remotely turn down gain in the highs until the feedback disappears. This feature, of course, has been very helpful during the COVID-19 pandemic.

- Movement detection: Using miniature accelerometers on the hearing aid chip, the hearing aids can detect when the patient is walking vs. stationary. The signal detection system assumes that when we are walking, we want to hear sounds from all around, and automatically will switch from directional processing to omnidirectional.

- Own voice analysis: Following less than a minute of training, the hearing aid learns the user’s voice, and changes signal processing whenever he or she talks (e.g., hearing aids are programmed to optimize loudness for average speech, but the user’s own voice typically is louder than average speech at the hearing aid microphone).

- Find lost hearing aid: Not only does your smartphone know where you are, and the locations of your Uber driver and favorite restaurants, it also can find your hearing aid! Once the hearing aids have been paired to the patient’s smartphone and the app detects the hearing aids nearby, it will show the patient whether they are getting closer or farther away, using the Bluetooth connection as a kind of radar. We recently published a vignette about a rancher who found his lost hearing aid in a 160-acre pasture using this feature (Mueller and Jorgensen, 2020).
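The movement-detection rule described above (walking switches the hearing aids from directional to omnidirectional processing) can be sketched as follows. The use of the standard deviation of total acceleration and the 0.1 g threshold are assumptions chosen for illustration; the text does not describe how real products detect walking.

```python
import math
from statistics import pstdev
from typing import List, Tuple

Sample = Tuple[float, float, float]  # accelerometer x, y, z in units of g

def is_walking(samples: List[Sample], threshold_g: float = 0.1) -> bool:
    """Treat large swings in total acceleration as walking (hypothetical rule)."""
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    return pstdev(magnitudes) > threshold_g

def microphone_mode(classifier_wants_directional: bool, samples: List[Sample]) -> str:
    # Walking overrides the classifier: assume the user wants sounds from all around.
    if is_walking(samples):
        return "omnidirectional"
    return "directional" if classifier_wants_directional else "omnidirectional"

sitting = [(0.0, 0.0, 1.0)] * 8                   # steady 1 g from gravity
walking = [(0.0, 0.0, 0.7), (0.0, 0.0, 1.3)] * 4  # bouncing with each step
print(microphone_mode(True, sitting))   # directional
print(microphone_mode(True, walking))   # omnidirectional
```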

I could go on and on, but instead I have put together a listing of some of the more common features in a summary table (Table 1). Not all manufacturers and products have all features, but most products have most features.

13. All pretty amazing. Earlier you mentioned the importance of audibility. How do audiologists ensure that that happens?

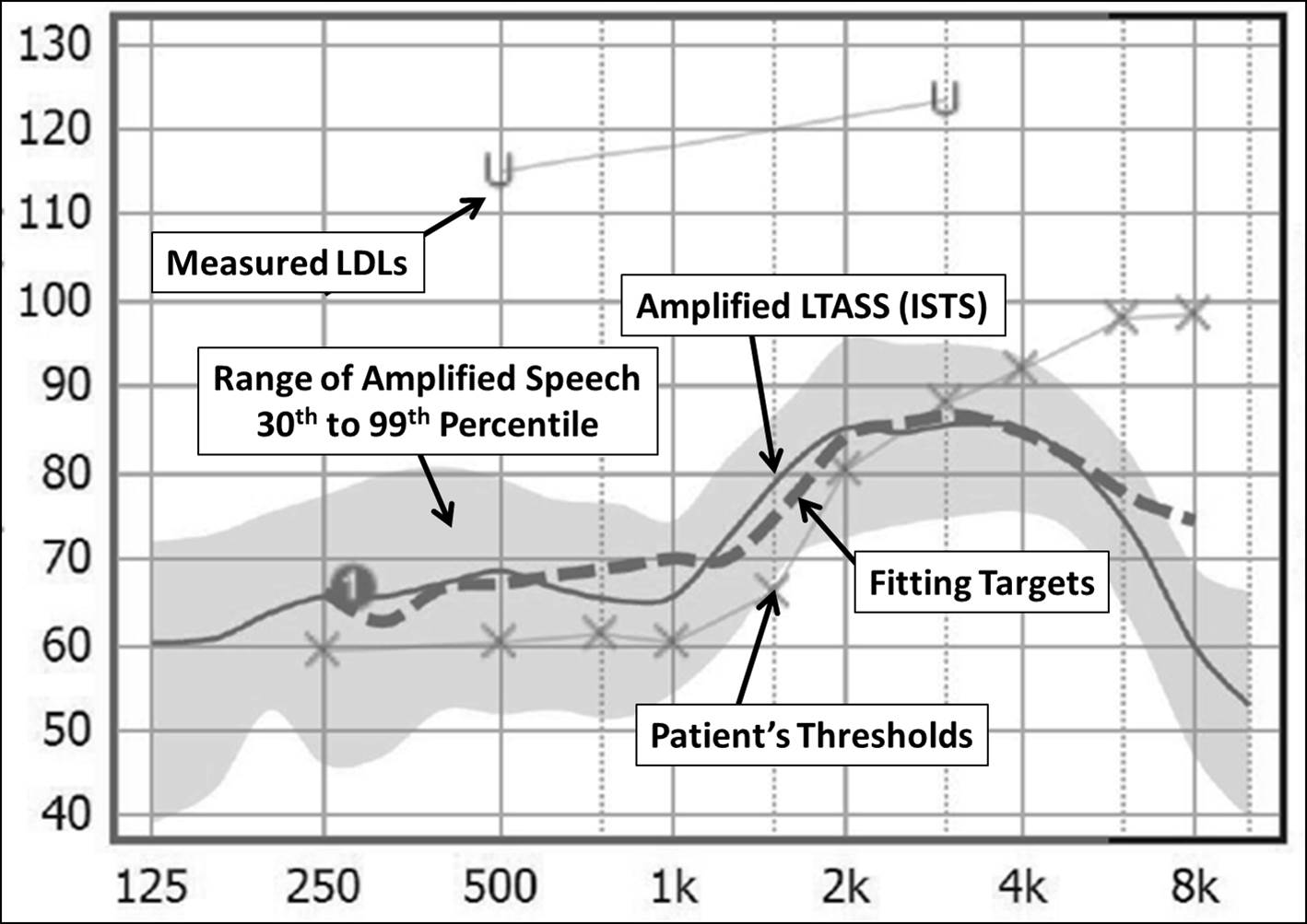

That's an important question and takes us to the verification portion of the total fitting process. It clearly is the most important component. The best product with all the latest bells and whistles will be rendered mostly useless if it’s not programmed correctly. You mention audibility, but we also need to consider loudness restoration, sound quality, listener comfort and acceptability. We could maximize audibility, but the patient probably wouldn’t use the hearing aids as there would be way too much high-frequency emphasis. We usually can improve sound quality by adding in more low-frequency gain, but often, this then reduces speech understanding in background noise. It’s always a compromise.

14. So how do you know what’s best?

Fortunately, today’s clinical audiologists can thank a handful of key researchers who, over the course of 30-40 years and using data from thousands of hearing aid users, put all the factors related to the “fitting compromise” in a big bowl, stirred them up, and came out with a couple of recipes that work the best. We call these prescriptive fitting approaches, and we use them to select the best gain and output for each patient based on the patient’s hearing thresholds and loudness discomfort levels.

While many methods have been suggested over the years, two have risen to the top. These are from the National Acoustic Laboratories (NAL) in Australia and Western University in Ontario, Canada. The current version from the NAL is the NAL-NL2. The method from Western is the Desired Sensation Level (DSL), and the current version is DSLv5.0. For adults, the gain and output recommended by the two procedures are fairly similar. For children, the DSL tends to recommend more gain. As the name indicates, sensation level (audibility) is the goal, and we know that children developing speech and language need more audibility than adults, who can often correctly predict syllables or words that they didn’t hear. In the U.S., the tendency is for audiologists to use the NAL when fitting adults and the DSL for the pediatric population.

15. So audiologists have fitting software that automatically programs the correct prescriptive gain and output for the patient’s hearing aids?

Sort of. That is the starting point. Each major manufacturer has both the NAL and DSL fitting algorithms as an option for programming their products.

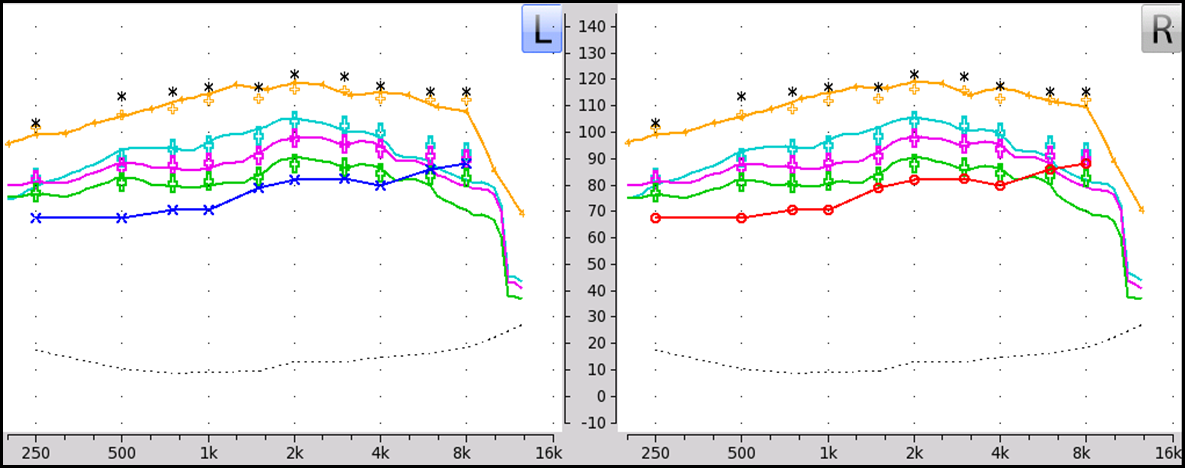

But as I stated, this is just the starting point. These automatic first-fit settings often do not match the prescriptive targets, and there are also differences from patient to patient because of ear canal size and configuration. Both the NAL and DSL prescriptions are for the SPL at the eardrum, and therefore, this is the measure we need for verification. These values are obtained using a miniature microphone placed in the ear canal near the tympanic membrane (within 5 mm). This is referred to as probe-microphone measures (PMM), real-ear measures (REM), or speech mapping (see Mueller, Ricketts & Bentler, 2017 for a review of the technology and procedures). Real speech, shaped to the international LTASS, is delivered to the patient wearing the hearing aids at different input levels (e.g., soft, average, and loud). For each input level, the audiologist then programs the hearing aids so that the measured ear canal output matches the desired fitting target at each frequency. An example of how this works for a 65-dB-SPL speech input is shown in Figure 3. Everything is displayed in ear canal SPL, so you have to get used to the audiogram being upside down.

Figure 3. Typical screen display when conducting speech mapping for hearing aid verification. This graph shows the patient’s thresholds and LDLs converted to real-ear SPL, the fitting targets, and the measured aided speech spectrum. This display is for a 65-dB-SPL input. Normally, testing would also be conducted for soft (50-55 dB SPL) and loud (75-80 dB SPL) inputs, with output adjusted accordingly to match targets.
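The fit-to-target step can be sketched as a per-frequency comparison of measured ear canal output with the prescriptive target. In this hypothetical Python example, the target and measured values are made up rather than read from Figure 3, the ±5 dB tolerance is an assumption, and the code assumes that output changes decibel-for-decibel with a gain change at this input level, which is a simplification for a compression hearing aid.

```python
from typing import Dict

def gain_changes_to_target(target_db_spl: Dict[int, float],
                           measured_db_spl: Dict[int, float],
                           tolerance_db: float = 5.0) -> Dict[int, float]:
    """Suggested gain change (dB) per frequency for one input level.

    Positive values mean "add gain"; frequencies already within
    +/- tolerance_db of target are left alone (0.0).
    """
    changes = {}
    for freq_hz, target in target_db_spl.items():
        shortfall = target - measured_db_spl[freq_hz]
        changes[freq_hz] = shortfall if abs(shortfall) > tolerance_db else 0.0
    return changes

# Hypothetical 65-dB-SPL speech-mapping results (ear canal SPL, LTASS).
targets = {500: 62.0, 1000: 65.0, 2000: 63.0, 4000: 58.0}
measured = {500: 64.0, 1000: 63.0, 2000: 55.0, 4000: 49.0}
print(gain_changes_to_target(targets, measured))
```

Clinically this is repeated for the soft and loud inputs, and the audiologist re-measures after each adjustment rather than trusting the arithmetic alone.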

As I mentioned, speech mapping verification is needed for soft, average, and loud inputs, using a real-speech signal. After finishing the process, we have the information that you see on the fitting screen in Figure 4, which shows a bilateral fitting for a child with a moderate-severe hearing loss. Remember that the output curves that you see here represent only the average (the center) of the amplified speech signal. The speech peaks at each level usually are 10-12 dB higher (refer back to Figure 3), so there is more speech audibility than appears from simply looking at the average. The full speech range can be displayed as an option during the measures; it just isn’t shown in this figure.

Figure 4. Results following the adjustment of hearing aid parameters to meet prescriptive targets for a bilateral fitting. The lower line is the patient’s thresholds (red for right, blue for left) converted to ear canal SPL values. Testing was conducted for three different input levels: soft (green), average (pink) and loud (blue). The upper gold tracing is a measure of the hearing aid’s maximum power output (using a swept tone) conducted to ensure that the values do not exceed predicted loudness discomfort levels (asterisks).
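The final check in the caption, that the maximum power output stays below the predicted loudness discomfort levels, amounts to a simple per-frequency comparison. The values in this Python sketch are hypothetical, not taken from Figure 4.

```python
from typing import Dict, List

def frequencies_over_ldl(mpo_db_spl: Dict[int, float],
                         ldl_db_spl: Dict[int, float]) -> List[int]:
    """Frequencies (Hz) where measured MPO exceeds the predicted LDL (both in ear canal SPL)."""
    return sorted(f for f, mpo in mpo_db_spl.items() if mpo > ldl_db_spl[f])

mpo = {500: 100.0, 1000: 104.0, 2000: 110.0, 4000: 106.0}  # hypothetical
ldl = {500: 105.0, 1000: 105.0, 2000: 106.0, 4000: 108.0}  # hypothetical
print(frequencies_over_ldl(mpo, ldl))  # [2000]
```

Any frequency returned would be a cue to lower the output limit in that region and re-measure.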

16. Do you also conduct these real-ear speech mapping measures with infants and toddlers?

Indirectly. We use the probe-microphone equipment to conduct a test called the real-ear-to-coupler difference (RECD). Using a small insert earphone, we compare the output of a calibrated signal in the child’s ear canal to that of the same signal presented in a 2-cc coupler. Once we know the frequency-specific RECDs, we can place the hearing aid on the 2-cc coupler and make all the necessary corrections to fit to target, just as if we were doing the measures with the hearing aid on the child’s ear. Nearly always, the output in the real ear will be greater than in the 2-cc coupler. So, for example, if the DSL target for the child’s ear canal for a 65-dB-SPL input signal at 2000 Hz was 88 dB, and we knew that the RECD was 8 dB, we would set the coupler output to 80 dB (because we know that the child’s ear will provide an 8 dB boost). Once we enter the frequency-specific RECDs in the software, all the corrections are conducted automatically by the test box equipment. This is sometimes referred to as a simulated REM fitting, or sREM.
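The RECD correction is just an addition or subtraction at each frequency. This minimal Python sketch uses the 2000 Hz numbers from the example (an 88 dB target for the child’s ear, an 8 dB RECD, and an 80 dB coupler setting); the 1000 and 4000 Hz values are hypothetical, and in practice the test box equipment applies these corrections automatically.

```python
from typing import Dict

def coupler_targets(real_ear_targets_db: Dict[int, float],
                    recd_db: Dict[int, float]) -> Dict[int, float]:
    """Convert real-ear targets to 2-cc coupler targets: coupler = real ear - RECD."""
    return {f: target - recd_db[f] for f, target in real_ear_targets_db.items()}

def predicted_real_ear(coupler_output_db: Dict[int, float],
                       recd_db: Dict[int, float]) -> Dict[int, float]:
    """Predict ear canal output from coupler output: real ear = coupler + RECD."""
    return {f: out + recd_db[f] for f, out in coupler_output_db.items()}

recd = {1000: 6.0, 2000: 8.0, 4000: 12.0}       # 1000/4000 Hz values hypothetical
targets = {1000: 85.0, 2000: 88.0, 4000: 80.0}  # 1000/4000 Hz values hypothetical
print(coupler_targets(targets, recd))  # {1000: 79.0, 2000: 80.0, 4000: 68.0}
```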

17. What about conducting aided threshold testing in an audiometric test booth?

For standard hearing aid fittings, for both adults and children, that’s a test that is rarely conducted these days. It never was very reliable because of head movements, masking effects, and the difficulty of eliminating the non-test ear. And face validity was poor, as thresholds in a test booth do not represent thresholds in the real world, where more ambient noise is present. With modern hearing aids there are added problems: these instruments have automatic signal processing that is designed to squash soft inputs (since these are usually background noise), making it nearly impossible to determine an exact aided threshold. The pediatric hearing aid fitting guidelines from the American Academy of Audiology state it quite clearly: “Measurement of aided sound field thresholds should not be used as a method of hearing aid verification.”

The good news is that we don’t need these aided sound field thresholds, as all the important information is available from our real-ear measures. Recall that one of the tests uses a soft input, so we know whether soft speech is audible (see Figure 4). Additionally, remember our earlier discussion of the SII? The real-ear equipment automatically calculates the aided SII for each input level that was tested. In Figure 5, we have the aided SIIs for the patient whose real-ear results are shown in Figure 4. Note that we have both the unaided and aided values for the three different input levels.
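For readers curious about what the equipment is computing, the SII is, at its core, an importance-weighted sum of band audibility: each frequency band has a weight reflecting how much it contributes to understanding speech, and that weight is multiplied by the proportion of the speech in the band that is audible. The Python sketch below is a simplified version. It assumes the ANSI S3.5 convention that speech in each band spans a 30 dB range with peaks 15 dB above the band average (a parameter here, since the text above cites 10-12 dB for the peaks); it ignores masking and level distortion, and the band levels, thresholds, and equal importance weights in the example are hypothetical rather than the standard’s tables.

```python
# Simplified SII sketch: importance-weighted sum of band audibility.
# Assumptions (not from the article): 30 dB speech range per band with peaks
# `peak_offset_db` above the band average; no masking or level distortion.
# The example levels, thresholds, and equal weights below are hypothetical.


def band_audibility(speech_avg_db: float, threshold_db: float,
                    peak_offset_db: float = 15.0) -> float:
    """Fraction (0 to 1) of the 30 dB speech range that lies above threshold."""
    audible = (speech_avg_db + peak_offset_db - threshold_db) / 30.0
    return min(1.0, max(0.0, audible))


def sii(speech_avg_db: list[float], thresholds_db: list[float],
        importance: list[float]) -> float:
    """SII = sum over bands of (band importance x band audibility)."""
    if not len(speech_avg_db) == len(thresholds_db) == len(importance):
        raise ValueError("All band lists must have the same length")
    if abs(sum(importance) - 1.0) > 1e-6:
        raise ValueError("Band importance weights must sum to 1")
    return sum(w * band_audibility(s, t)
               for s, t, w in zip(speech_avg_db, thresholds_db, importance))


# Hypothetical four-band example: unaided vs. aided speech levels for the
# same thresholds (all values in ear canal dB SPL).
thresholds = [40.0, 50.0, 60.0, 70.0]
weights = [0.25, 0.25, 0.25, 0.25]
unaided = sii([50.0, 45.0, 40.0, 35.0], thresholds, weights)
aided = sii([60.0, 60.0, 55.0, 50.0], thresholds, weights)
```

Running the same thresholds with unaided and aided speech levels is what produces the paired unaided/aided values in Figure 5; the real equipment uses the standard’s band-importance function and the measured aided spectrum for each input level.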

Figure 5. Aided SII values for the three different input levels for the fitting displayed in Figure 4 (left ear is on the left; right ear on the right).

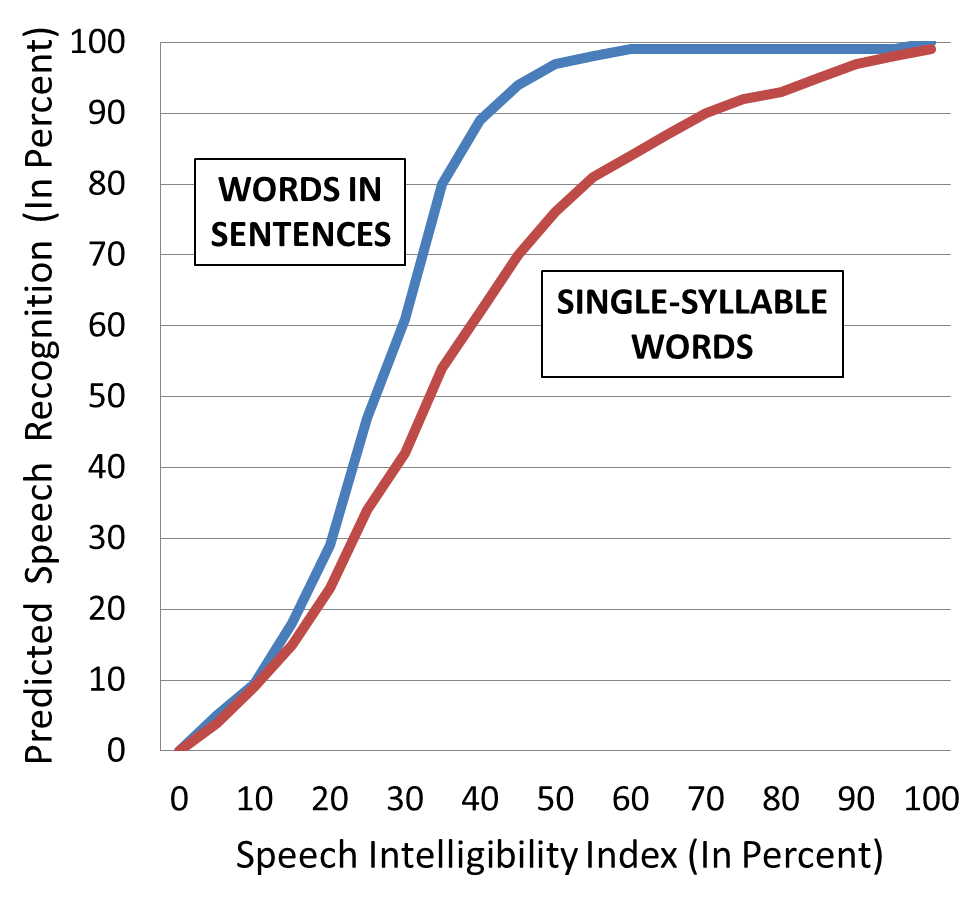

As I mentioned before, we can use SII values to predict speech understanding (in quiet). The outcome does depend on the speech material. An example of a conversion chart is shown in Figure 6, where we have performance functions for both words in sentences and words in isolation. As you would expect, the function for words in sentences is steeper, as we can predict some of the words based on context, and do not need as much audibility.

Figure 6. Example of conversion chart that relates the SII (X-axis) to predicted speech recognition (Y-axis). Conversions are available for several different types of speech material. The graph shows predicted performance functions for words in sentences and single-syllable words in isolation.

To illustrate how this could be used clinically, if a patient’s unaided SII was 20%, and his aided SII (for an average input) was 60%, we would predict that his performance for words in sentences would go from ~30% (unaided) to ~100% (aided) and his performance for words in isolation would go from ~25% (unaided) to ~85% (aided). We could do the same calculations for soft and loud inputs.

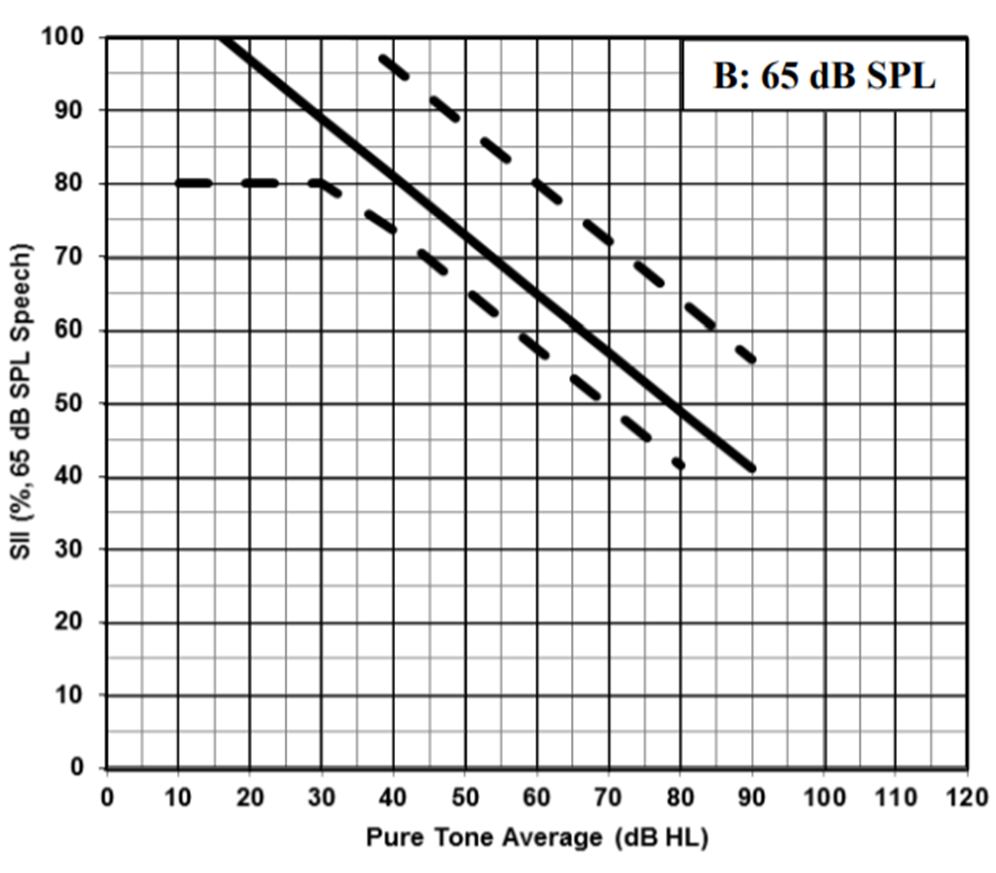
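Clinically, the conversion chart is a lookup: find the SII on the X-axis and read the predicted score off the curve. If you wanted to automate that from points you had read off a published chart yourself, a piecewise-linear lookup is one simple option (an assumption on our part; the published curves are smooth, not straight segments). In the Python sketch below, the function name is hypothetical and the only anchor points are the approximate values quoted in the worked example above, with the SII expressed as a proportion (0 to 1) rather than a percentage.

```python
from bisect import bisect_left

# Hypothetical anchor points, (SII as 0-1, predicted % correct). These are only
# the approximate values quoted in the worked example; a usable table would
# need many more points read off a published conversion chart.
EXAMPLE_SENTENCES = [(0.20, 30.0), (0.60, 100.0)]
EXAMPLE_WORDS = [(0.20, 25.0), (0.60, 85.0)]


def predict_score(sii: float, points: list[tuple[float, float]]) -> float:
    """Piecewise-linear lookup of predicted % correct for a given SII.

    `points` must be sorted by strictly increasing SII. Values outside the
    tabulated range are clamped to the first or last score.
    """
    if not points:
        raise ValueError("Need at least one (SII, score) point")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    if any(b <= a for a, b in zip(xs, xs[1:])):
        raise ValueError("SII values must be strictly increasing")
    if sii <= xs[0]:
        return ys[0]
    if sii >= xs[-1]:
        return ys[-1]
    i = bisect_left(xs, sii)  # first index with xs[i] >= sii (here i >= 1)
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (sii - x0) / (x1 - x0)
```

`predict_score(0.60, EXAMPLE_SENTENCES)` returns `100.0` and `predict_score(0.60, EXAMPLE_WORDS)` returns `85.0`, matching the aided predictions in the example. With only two anchor points per curve, anything in between is a straight-line guess, so denser points from the actual chart would be needed for anything beyond illustration.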

Audibility, of course, is most critical for pediatric fittings. The audiology folks at Western University have devised very useful SII charts that can be used to “grade” these hearing aid fittings. Based on the real-ear testing of several successful pediatric fittings, they have developed a set of acceptable SIIs, displayed in Figure 7 (for the 0-6 age range). Note that as the hearing loss becomes more severe (X-axis; average of hearing loss at 500, 1000, and 2000 Hz), the SIIs considered acceptable become smaller, simply because of the practical limits of amplification. The chart shown here is for an input of 65 dB SPL.

Figure 7. Chart used to determine the acceptability of pediatric hearing aid fittings for a 65-dB-SPL real-speech signal. The average pure-tone hearing loss (500, 1000, and 2000 Hz), shown on the X-axis, is compared to the real-ear aided SII (generated by the probe-microphone equipment), displayed on the Y-axis. The fitting is considered acceptable if the intersection falls within the boundaries shown on the chart. (From the Child Amplification Laboratory, National Centre for Audiology, The University of Western Ontario Pediatric Audiological Monitoring Protocol).

If we go back to Figure 5, we see that this child had an SII of .68 for average inputs (right ear). His pure-tone average (in dB HL, not the ear canal SPL values shown on the screen) was 62 dB. Using the chart shown in Figure 7, we see that the intersection of these values falls well within the acceptable fitting range. There is a similar chart for soft speech. You can find these charts, along with a lot of other good information regarding amplification for children, at https://www.uwo.ca/nca/pdfs/clinical_protocols/IHP_Amplification Protocol_2019.01.pdf

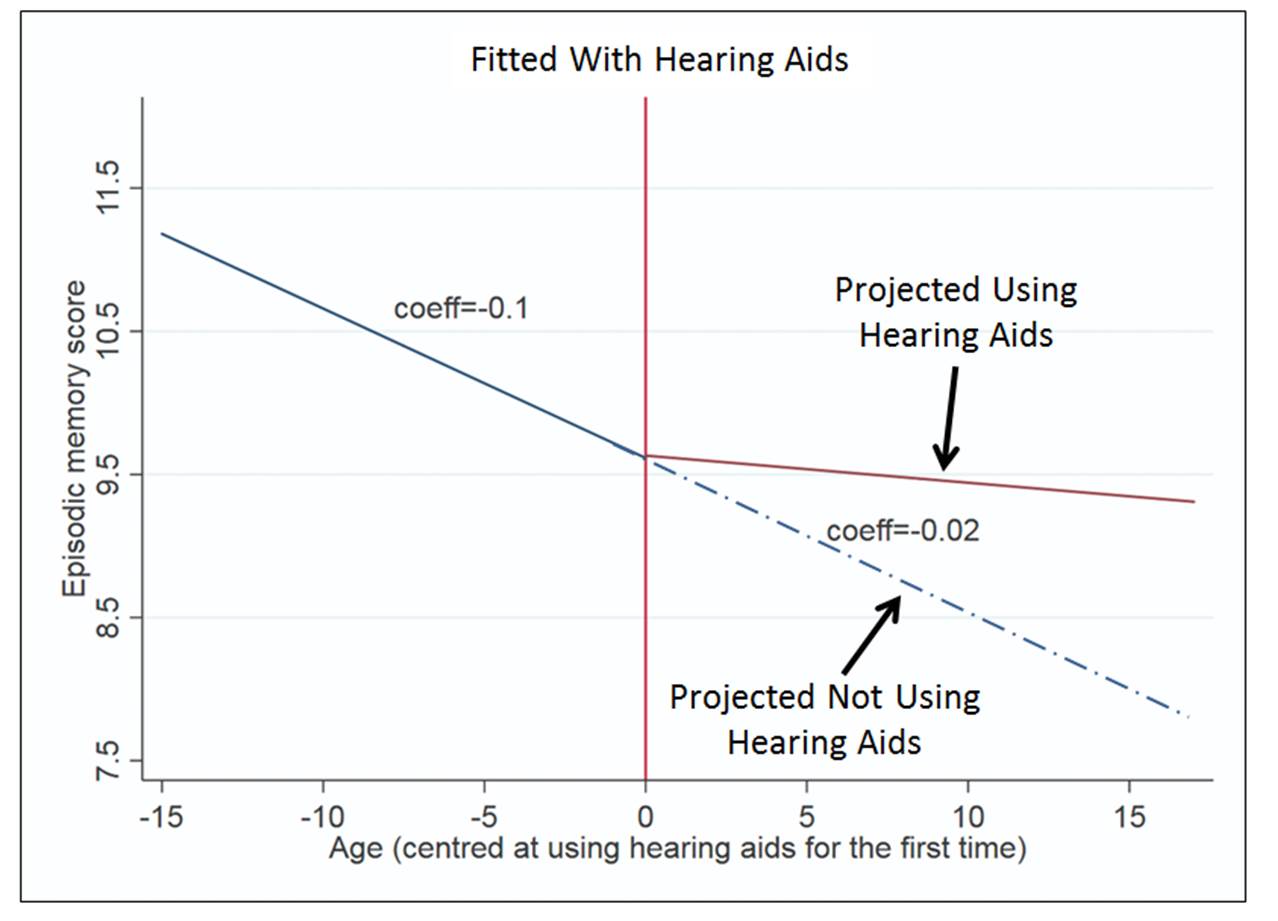

18. I’ll check it out. Since I’m almost out of questions, I want to change the subject somewhat and ask—what is the latest regarding the use of hearing aids and cognitive decline?

As you probably know, considerable research in recent years has examined the relationship between hearing loss and dementia. What we don’t yet know is whether it is simply an association or whether there is possibly even a causal link. What we do know is that there is some link: hearing loss impacts cognition, cognition impacts hearing, and common factors influence both hearing and cognition. One intriguing aspect of this research is the question of whether fitting hearing aids will change the predictable downward spiral of cognition for people with hearing loss. The answer might be “yes.” I’m referring to the recent findings of the large-scale Sense-Cog Project (Dawes, Maharani, Nazroo, Tampubolon, & Pendleton, 2019). Trajectories of cognitive function were plotted based on memory test scores before and after the participants began using hearing aids. After adjusting for potential confounders (including gender, education, smoking, alcohol consumption, marital status, employment, physical activities, symptoms of depression, and number of health comorbidities), the researchers found that the rate of cognitive decline on a memory test was slower following the adoption of hearing aids (see Figure 8). All good news, so stay tuned for further developments.

Figure 8. Projected rate of memory decline as a function of aging, and the projected change in the trajectory once hearing aids are obtained (the “0” point is when hearing aids were fitted).

19. Another hearing aid issue I’ve been hearing a lot about is the introduction of over-the-counter products. What’s the latest on that?

After several years of meetings among government and private groups about making hearing aids more available and affordable, the Over-the-Counter Hearing Aid Act was signed into law in August 2017. The FDA was directed to develop regulations ensuring that OTC devices are safe and effective for people with mild-to-moderate hearing loss. We were told that we would see these regulations within 3 years, so their release should be soon; they may be out by the time you read this. We do know that several large electronics companies (e.g., Bose, Samsung) have shown interest in putting out an OTC hearing aid product. All indications are that the products will be relatively high quality and, in some cases, will come with smartphone apps that allow consumers to test and fit themselves.

I don’t think anyone really knows how this will impact hearing aid adoption and the overall hearing aid distribution system. There are estimates that over 50% of those who need hearing aids do not own them. Will the new OTC devices prompt them all to flock to Aisle 7 of their neighborhood Walgreens store? Or are there issues other than price and convenience that are preventing them from using hearing aids? Will the new OTC devices have a huge impact on hearing aid sales by audiologists, or are these consumers a different market segment? Will audiologists make their own counters the place to buy OTCs? It will be interesting to see how things play out.

20. Interesting times. Any closing comments?

We got a little sidetracked a couple of questions ago, so I do need to mention that clinical verification is not the end of the fitting process. The final step is real-world validation, which usually occurs after 3 weeks or so of hearing aid use. The most common method is a form called the COSI (Client Oriented Scale of Improvement). On the day of the fitting, the patient selects four or five listening situations where he or she would like to see improvement with hearing aids and determines importance ratings for the different settings; then, after a few weeks of hearing aid use, the patient rates the improvement. It’s very useful for assessing benefit and facilitating post-fitting counseling.

So that takes us to the end of our conversation. Maybe more than you wanted to know? But I hope that some of this will be helpful when you see your next patient/client who wears hearing aids, be it an adult or a child.

References

Dawes P, Maharani A, Nazroo J, Tampubolon G, Pendleton N. (2019) Evidence that hearing aids could slow cognitive decline in later life. Hearing Review. 26(1):10-11.

Killion MC, Mueller HG. (2010) Twenty years later: A NEW Count-The-Dots method. The Hearing Journal. 63(1):10-17.

Kujawa S. (2017) Cochlear Synaptopathy - Interrupting Communication from Ear to Brain. AudiologyOnline. Retrieved from www.AudiologyOnline.com

Mueller HG, Killion MC. (1990) An easy method for calculating the articulation index. The Hearing Journal. 43(9):14-17.

Mueller HG, Ricketts TA, Bentler R. (2014) Modern Hearing Aids: Pre-fitting testing and selection considerations. San Diego: Plural Publishing.

Mueller HG, Ricketts TA, Bentler R. (2017) Speech Mapping and Probe Microphone Measurements. San Diego: Plural Publishing.

Mueller HG, Jorgensen L. (2020) Hearing Aids for Speech-Language Pathologists. San Diego: Plural Publishing.

Ricketts TA, Bentler R, Mueller HG. (2019) Essentials of Modern Hearing Aids. San Diego: Plural Publishing.

Citation

Mueller, G. (2020). 20Q: Modern Hearing Aids - A Primer for Speech-Language Pathologists. SpeechPathology.com, Article 20379. Retrieved from www.speechpathology.com